sonible’s Alexander Wankhammer explains how the neural networks of smart:gate were trained and gives insights into the decision-making and challenges behind the newest member of the smart: range.

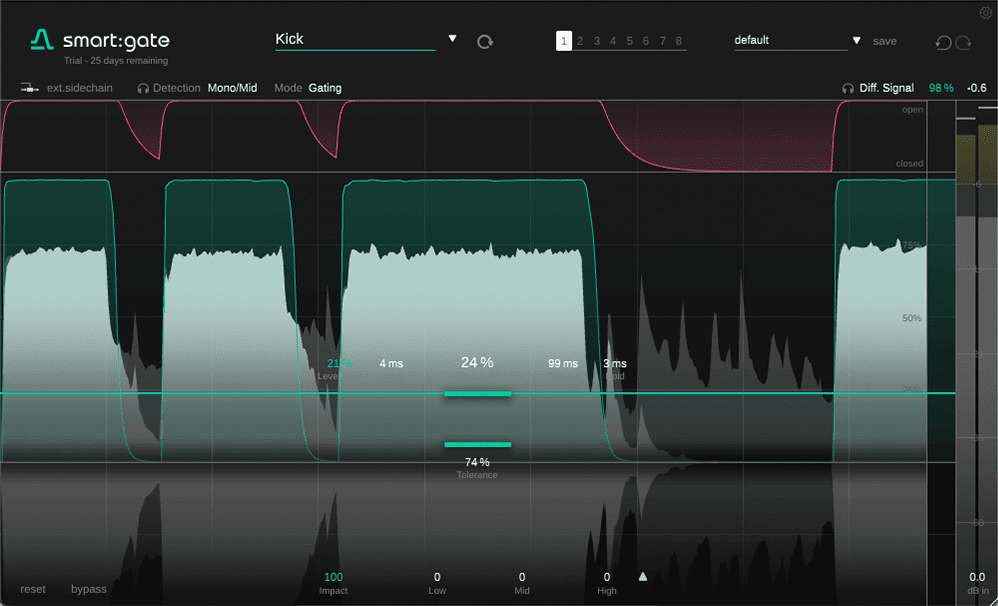

Our new plugin, smart:gate, is a content-aware audio gate that can perceive the presence of its target instrument.

Like the other members of our smart: range, it approaches a traditional task in a new way, using artificial intelligence to assist the user – not to do the whole job itself. In smart:gate, the detection stage is AI-powered: the gate opens when it recognizes the desired target source rather than relying on level alone. To explain more about the development process behind the plugin and its forward-thinking back-end, here’s sonible CMO Alexander Wankhammer with some of the nuts and bolts.

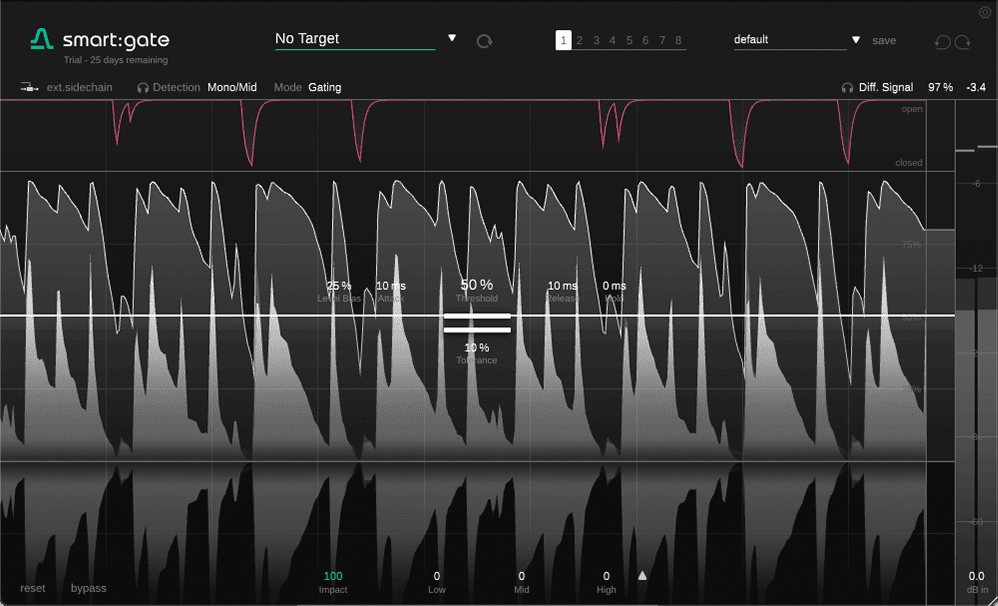

You’re able to gate stuff that you wouldn’t be able to gate otherwise. So let’s say you have some crosstalk in between the source parts, and that’s louder than the source. You couldn’t gate it using a normal gate, but with smart:gate you can easily do it because it just doesn’t react to the crosstalk.

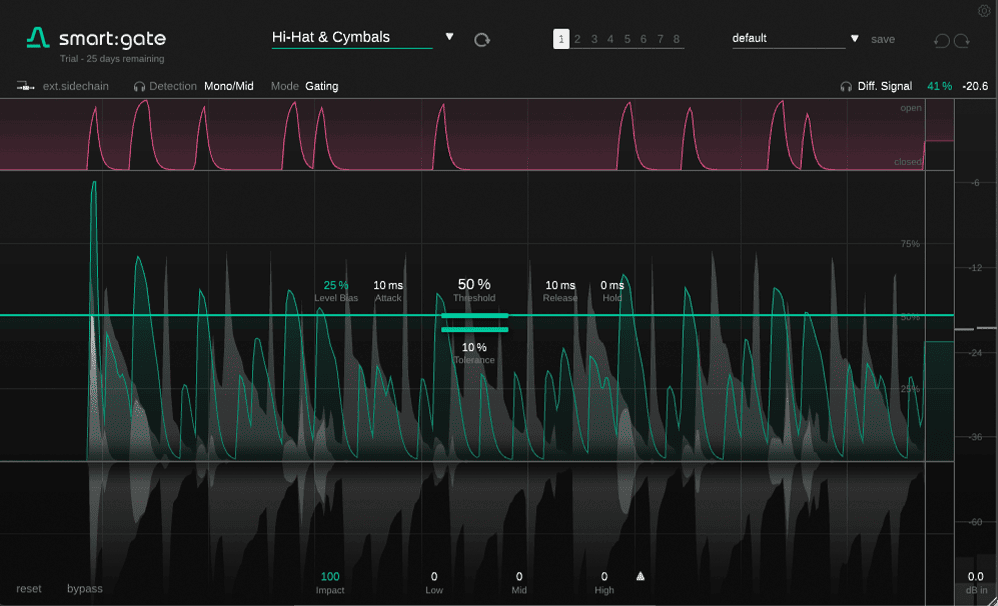

Also, if you have a signal that is very loud at some points and quiet at others, then with a minimum Level Bias setting you don’t have to touch the level. smart:gate only looks at the presence of the signal – it doesn’t really care how loud it is. And for drums you can also split them; if you have a drum loop, you can separate the individual drum hits rather easily using the different profiles.

Our mission with the smart: range has been to create smarter versions of the building blocks of music production. We have smart:EQ, smart:comp and so on, but one of the building blocks that we had in mind was the gate.

A gate is tricky: on the one hand it’s a very simple tool, and on the other hand it can be very hard to use, depending on what you’re trying to achieve. One thing that makes a gate so difficult sometimes is that it simply looks at the level; no matter what’s actually coming in, it just opens and closes.

From quite early on, when we started experimenting with instrument recognition, we thought, “Wouldn’t it be nice if there was some real-time awareness of what’s coming in?” You open the gate only if the source signal that you want to let through is actually there. And if it’s not there, you keep the gate closed, no matter how loud the incoming signal is. This was the core idea and this is still the core idea of smart:gate.

When we develop something, we typically do the prototyping in Python. A lot of machine learning is done in Python. We also start without the restrictions that we have in a plugin – we don’t immediately try to do it in real-time; we try to do it offline and then we try to optimize it so it can become real-time capable.

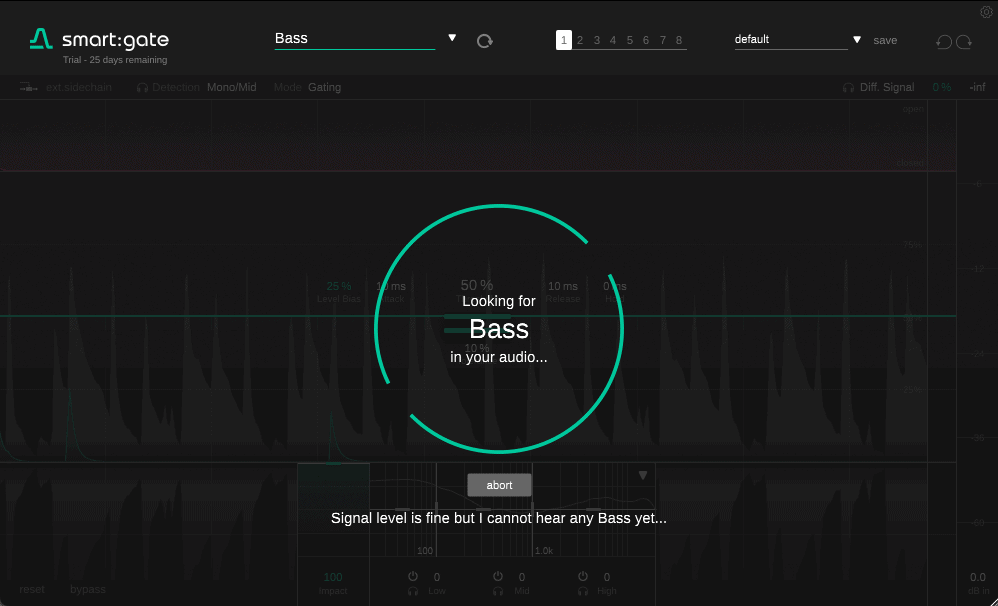

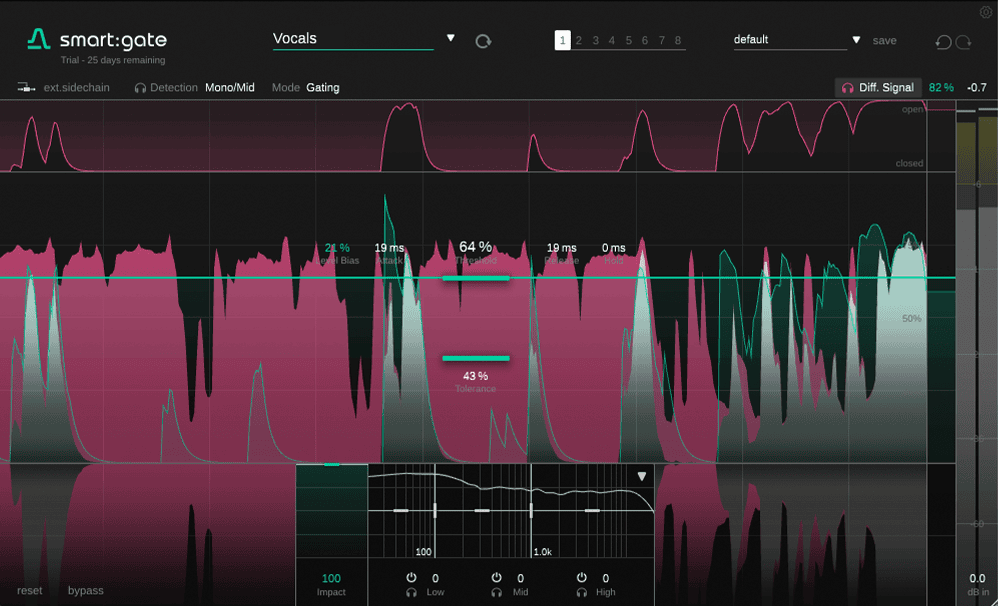

The first thing we tried to get right was vocals. One of the reasons we started with vocals is that we have the largest amount of training data there, so we knew that if we couldn’t do it for vocals then most likely we wouldn’t be able to do it for other sources. It worked for vocals, and so we started trying to make it work for things like guitar and piano, then drums – drums are always interesting for gating.

When we started testing the drums, I had a pair of samples and one worked great and the other one didn’t. As a human listener, I couldn’t tell why and what the difference was, because for me they were both so similar in their characteristics. But for the neural network, there was something in there – in one of the samples – that just didn’t work.

In these situations, the answer is to give the network a greater variety of examples – more examples of what drums could sound like. And we have different definitions within drums, with kick, snare, and so on.

What you’re trying to do is present the system with, ideally, any possible sound that could be, say, a kick drum. Obviously that’s not completely possible, but we have to keep the variety as high as possible so that no matter what kick type and tempo is coming in, the system understands, “OK, this is a kick”, and the same for all the other instruments too. For the snare, for example, it depends on how the snare is hit, and on the position, placement and tuning of the snare – everything. There are so many details, but as a human listener, you think “That’s a snare, that’s a snare, that’s a snare”. The AI is learning to make the same identification, but for an automatic system it’s surprisingly difficult sometimes.

What the network is actually trained on is estimating the RMS level of the target signal. So if you send in a mixture of the target signal and some other signals, the system tries to estimate the RMS of the target signal. It doesn’t try to recreate the target signal, with all its frequency information, because this would be much harder. The higher the RMS of the target compared to the mix, the higher the presence of the signal. That’s what it does.
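As a rough illustration of what such a training target could look like, here is a minimal Python sketch that computes a frame-wise RMS level in dB for an isolated target signal that is then mixed with crosstalk. The frame length, hop size, sample rate and test signals are assumptions chosen for the example; the interview does not describe sonible’s actual data pipeline.

```python
import numpy as np

def frame_rms_db(signal, frame_len=1024, hop=512, eps=1e-12):
    """Frame-wise RMS level in dB (relative to full scale) of a mono signal.

    frame_len and hop are assumed values, not taken from the plugin.
    """
    n_frames = 1 + max(0, len(signal) - frame_len) // hop
    levels = np.empty(n_frames)
    for i in range(n_frames):
        frame = signal[i * hop : i * hop + frame_len]
        rms = np.sqrt(np.mean(frame ** 2))
        levels[i] = 20.0 * np.log10(rms + eps)  # eps avoids log(0) on silence
    return levels

# Hypothetical training pair: the network would see `mixture` as input
# and be asked to predict `labels`, the RMS level of the target alone.
rng = np.random.default_rng(0)
sr = 16000                                      # assumed sample rate
t = np.arange(sr) / sr
target = 0.25 * np.sin(2 * np.pi * 220.0 * t)   # stand-in for the instrument
crosstalk = 0.1 * rng.standard_normal(sr)       # stand-in for everything else
mixture = target + crosstalk
labels = frame_rms_db(target)
```

The labels here are one number per frame rather than a full waveform or spectrum, which is the simplification described above: the level of the target is estimated, not the target itself.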

It’s real-time, so for every frame that comes in, it’s trying to say, “I have -15 dB of piano in this frame, -15.1 dB in the next frame, and so on.” Inside the plugin we use this information in two ways. One is to determine the source-to-mix ratio, or “target signal energy to whole mix energy”. If the crosstalk component is as loud as the target signal, the ratio is 50/50. If the rest of the mix is louder than the target, you get an even lower value. The ratio doesn’t depend on the absolute level of the signal, only on the relative level of target to mix.
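The source-to-mix ratio can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, levels are given as RMS values in dB, and the target and crosstalk are treated as uncorrelated so that their energies add. How the plugin turns this ratio into an open or closed gate is not covered in the interview and is not modeled here.

```python
import numpy as np

def source_to_mix_ratio(target_rms_db, mix_rms_db):
    """Target energy divided by whole-mix energy, clipped to [0, 1]."""
    diff_db = np.asarray(target_rms_db, dtype=float) - np.asarray(mix_rms_db, dtype=float)
    ratio = 10.0 ** (diff_db / 10.0)  # a dB difference of levels maps to an energy ratio
    return np.clip(ratio, 0.0, 1.0)

def mix_level_db(target_db, crosstalk_db):
    """Level of the sum of two uncorrelated signals (their energies add)."""
    energy = 10.0 ** (target_db / 10.0) + 10.0 ** (crosstalk_db / 10.0)
    return 10.0 * np.log10(energy)

# Crosstalk as loud as the target: half of the mix energy is target.
equal = source_to_mix_ratio(-15.0, mix_level_db(-15.0, -15.0))

# Crosstalk 6 dB louder than the target: the ratio drops below one half.
louder = source_to_mix_ratio(-15.0, mix_level_db(-15.0, -9.0))

# Same relation, everything 20 dB quieter: the ratio is unchanged.
quieter = source_to_mix_ratio(-35.0, mix_level_db(-35.0, -29.0))
```

In this sketch `equal` comes out at one half, `louder` is smaller, and `quieter` matches `louder`, which mirrors the point that only the relative level of target to mix matters.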

You can try smart:gate for 30 days or get your license here. You can also get it as part of the smart:bundle.